NATURAL LANGUAGE PROCESSING(NLP)

Natural Language Processing (NLP) is a field in Artificial Intelligence, and is also related to linguistics. On a high level, the goal of NLP is to program computers to automatically understand human languages, and also to automatically write/speak in human languages. We say “Natural Language” to mean human language, and to indicate that we are not talking about computer (programming) languages.

Structured Data Vs Unstructured Data

Structured data is data that has been organized into a formatted repository, typically a database, so that its elements can be made addressable for more effective processing and analysis. For the most part, structured data refers to information with a high degree of organization.

Unstructured data represents any data that does not have a recognizable structure. It is unorganized and raw and can be non-textual or textual. For example, email is a fine illustration of unstructured textual data. It includes time, date, recipient and sender details and subject, etc., but an email body remains unstructured.

Today, with digitization of everything, 80% of the data being created is unstructured. Audio, video, our social footprints, the data generated from conversations between customer service reps, tons of legal documents, and texts processed in financial sectors are examples of unstructured data. Organizations are turning to natural language processing (NLP) technology to derive understanding from the myriad unstructured data available online, in call logs, and in other sources.

Applications of NLP

Here are a few real world usages of NLP today:

1) Spell check functionality in Microsoft Word is the most basic and well-known application.

2) Text analysis, also known as sentiment analytics, is a key use of NLP. Businesses can use it to learn how their customers feel emotionally and use that data to improve their service.

3) By using email filters to analyze the emails that flow through their servers, email providers can use Naive Bayes spam filtering to calculate the likelihood that an email is spam based its content.

4) Call center representatives often hear the same, specific complaints, questions, and problems from customers. Mining this data for sentiment can produce incredibly actionable intelligence that can be applied to product placement, messaging, design, or a range of other uses.

5) Google, Bing, and other search systems use NLP to extract terms from text to populate their indexes and parse search queries.

6) Google Translate applies machine translation technologies in not only translating words, but also in understanding the meaning of sentences to improve translations.

7) Financial markets use NLP by taking plain-text announcements and extracting the relevant info in a format that can be factored into making algorithmic trading decisions. For example, news of a merger between companies can have a big impact on trading decisions, and the speed at which the particulars of the merger (e.g., players, prices, who acquires who) can be incorporated into a trading algorithm can have profit implications in the millions of dollars.

8) Nowadays NLP is widely used in chatbot development

NATURAL LANGUAGE TOOLKIT (NLTK)

The NLTK module is a massive tool kit, aimed at helping you with the entire Natural Language Processing (NLP) methodology. NLTK will aid you with everything from splitting sentences from paragraphs, splitting up words, recognizing the part of speech of those words, highlighting the main subjects, and then even with helping your machine to understand what the text is all about.In our path to learning how to do sentiment analysis with NLTK, we’re going to learn the following:

- Installation of NLTK

- Basic of NLTK ( Corpus, Lexicon, Token)

- Tokenization

- Stop Words

- Stemming words

- Parts of speech tagging

- Chunking

- Chinking

- Name Identity Recognition

- Lemmatizing

- Corpora

- Wordnet

- Text Classification

- Converting words to features

- Bag of words

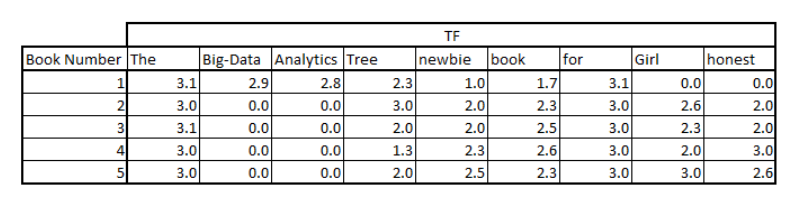

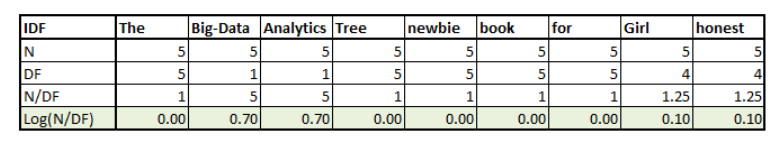

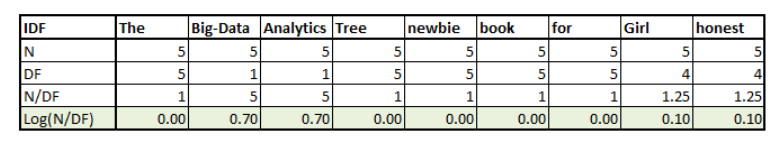

- Document Term Matrix

- Word Embedding

- Text matching/Similarity

1. INSTALLATION OF NLTK

In order to get started, you are going to need the NLTK module, as well as Python. If you do not have Python yet, go to Python.org and download the latest version of Python if you are on Windows. If you are on Mac or Linux, you should be able to run an apt-get install python3

Next, you’re going to need NLTK 3. The easiest method to installing the NLTK module is going to be with pip.

For all users, that is done by opening up cmd.exe, bash, or whatever shell you use and typing: pip install nltk

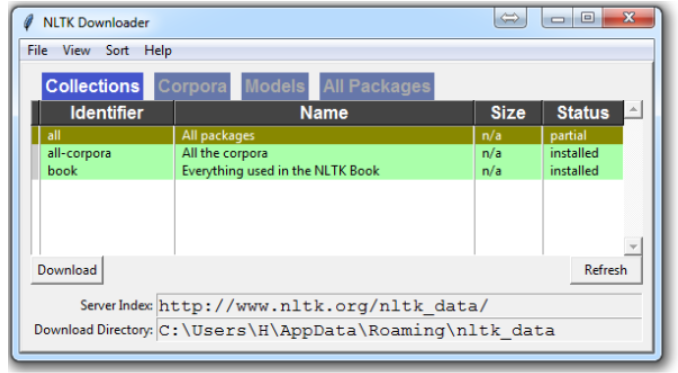

Next, we need to install some of the components for NLTK. Open python via whatever means you normally do, and type:

import nltk

nltk.download()

Note: Choose to download “all” for all packages, and then click ‘download.’ This will give you all of the tokenizers, chunkers, other algorithms, and all of the corpora. If space is an issue, you can elect to selectively download everything manually. The NLTK module will take up about 7MB, and the entire nltk_data directory will take up about 1.8GB, which includes your chunkers, parsers, and the corpora

2. BASICS OF NLTK ( Corpus, Lexicon, Token)

Now that you have all the things that you need, let’s knock out some quick vocabulary:

Corpus – Corpus basically means a body, and in the context of Natural Language Processing (NLP), it means a body of text.

Corpora – It is the plural for corpus.

Lexicon – Words and their meanings. Example: English dictionary. Consider, however, that various fields will have different lexicons. For example: To a financial investor, the first meaning for the word “Bull” is someone who is confident about the market, as compared to the common English lexicon, where the first meaning for the word “Bull” is an animal . As such there is a special lexicon for financial investors, doctors, children, mechanics, and so on.

Token – Each “entity” that is a part of whatever was split up based on rules. For examples, each word is a token when a sentence is “tokenized” into words. Each sentence can also be a token, if you tokenized the sentences out of a paragraph.

3. TOKENIZATION

Example of how one might actually tokenize something into tokens with the NLTK module:

from nltk.tokenize import sent_tokenize, word_tokenize

EXAMPLE_TEXT = "Hello Mr. Smith, how are you doing today? The weather is great, and Python is awesome. The sky is pinkish-blue. You shouldn't eat cardboard."

print(sent_tokenize(EXAMPLE_TEXT))['Hello Mr. Smith, how are you doing today?', 'The weather is great, and Python is awesome.', 'The sky is pinkish-blue.', "You shouldn't eat cardboard."]The above code will output the sentences, split up into a list of sentences, which you can do things like iterate through with a for loop. [‘Hello Mr. Smith, how are you doing today?’, ‘The weather is great, and Python is awesome.’, ‘The sky is pinkish-blue.’, “You shouldn’t eat cardboard.”]

So there, we have created tokens, which are sentences. Let’s tokenize by word instead this time:

print(word_tokenize(EXAMPLE_TEXT))['Hello', 'Mr.', 'Smith', ',', 'how', 'are', 'you', 'doing', 'today', '?', 'The', 'weather', 'is', 'great', ',', 'and', 'Python', 'is', 'awesome', '.', 'The', 'sky', 'is', 'pinkish-blue', '.', 'You', 'should', "n't", 'eat', 'cardboard', '.']There are a few things to note here. First, notice that punctuation is treated as a separate token. Also, notice the separation of the word “shouldn’t” into “should” and “n’t.” Finally, notice that “pinkish-blue” is indeed treated like the “one word” it was meant to be turned into. Pretty cool!

Now, looking at these tokenized words, we have to begin thinking about what our next step might be. We start to ponder about how might we derive meaning by looking at these words. We can clearly think of ways to put value to many words, but we also see a few words that are basically worthless.

4. STOP WORDS

The idea of Natural Language Processing is to do some form of analysis, or processing, where the machine can understand, at least to some level, what the text means, says, or implies.

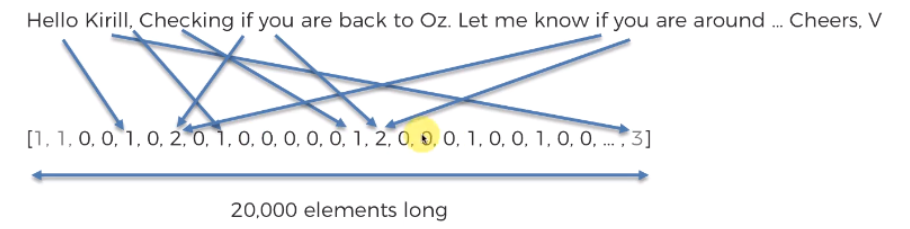

This is an obviously massive challenge, but there are steps to doing it that anyone can follow. The main idea, however, is that computers simply do not, and will not, ever understand words directly.In humans, memory is broken down into electrical signals in the brain, in the form of neural groups that fire in patterns. There is a lot about the brain that remains unknown, but, the more we break down the human brain to the basic elements, we find out basic the elements really are. Well, it turns out computers store information in a very similar way! We need a way to get as close to that as possible if we’re going to mimic how humans read and understand text. Generally, computers use numbers for everything, but we often see directly in programming where we use binary signals (True or False, which directly translate to 1 or 0, which originates directly from either the presence of an electrical signal (True, 1), or not (False, 0)). To do this, we need a way to convert words to values, in numbers, or signal patterns. The process of converting data to something a computer can understand is referred to as “pre-processing.” One of the major forms of pre-processing is going to be filtering out useless data. In natural language processing, useless words (data), are referred to as stop words.

Immediately, we can recognize ourselves that some words carry more meaning than other words. We can also see that some words are just plain useless, and are filler words. We use them in the English language, for example, to sort of “fluff” up the sentence so it is not so strange sounding. An example of one of the most common, unofficial, useless words is the phrase “umm.” People stuff in “umm” frequently, some more than others. This word means nothing, unless of course we’re searching for someone who is maybe lacking confidence, is confused, or hasn’t practiced much speaking. We all do it, you can hear me saying “umm” or “uhh” in the videos plenty of …uh … times. For most analysis, these words are useless.

We would not want these words taking up space in our database, or taking up valuable processing time. As such, we call these words “stop words” because they are useless, and we wish to do nothing with them. Another version of the term “stop words” can be more literal: Words we stop on.

For example, you may wish to completely cease analysis if you detect words that are commonly used sarcastically, and stop immediately. Sarcastic words, or phrases are going to vary by lexicon and corpus. For now, we’ll be considering stop words as words that just contain no meaning, and we want to remove them.

You can do this easily, by storing a list of words that you consider to be stop words. NLTK starts you off with a bunch of words that they consider to be stop words, you can access it via the NLTK corpus with:

from nltk.corpus import stopwordsfrom nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

example_sent = "This is a sample sentence, showing off the stop words filtration."

stop_words = set(stopwords.words('english'))

word_tokens = word_tokenize(example_sent)

filtered_sentence = [w for w in word_tokens if not w in stop_words]

filtered_sentence = []

for w in word_tokens:

if w not in stop_words:

filtered_sentence.append(w)

print(word_tokens)

print(filtered_sentence)['This', 'is', 'a', 'sample', 'sentence', ',', 'showing', 'off', 'the', 'stop', 'words', 'filtration', '.']

['This', 'sample', 'sentence', ',', 'showing', 'stop', 'words', 'filtration', '.']5. STEMMING

The idea of stemming is a sort of normalizing method. Many variations of words carry the same meaning, other than when tense is involved.

The reason why we stem is to shorten the lookup, and normalize sentences.

Consider:

I was taking a ride in the car. I was riding in the car. This sentence means the same thing. in the car is the same. I was is the same. the ing denotes a clear past-tense in both cases, so is it truly necessary to differentiate between ride and riding, in the case of just trying to figure out the meaning of what this past-tense activity was?

No, not really.

This is just one minor example, but imagine every word in the English language, every possible tense and affix you can put on a word. Having individual dictionary entries per version would be highly redundant and inefficient, especially since, once we convert to numbers, the “value” is going to be identical.

One of the most popular stemming algorithms is the Porter stemmer, which has been around since 1979.

First, we’re going to grab and define our stemmer:

from nltk.stem import PorterStemmer

from nltk.tokenize import sent_tokenize, word_tokenize

ps = PorterStemmer()example_words = ["python","pythoner","pythoning","pythoned","pythonly"]Above we chose some words with a similar stem. Next, we can easily stem by doing something like:

for w in example_words:

print(ps.stem(w))python

python

python

python

pythonliNow let’s try stemming a typical sentence, rather than some words:

new_text = "It is important to by very pythonly while you are pythoning with python. All pythoners have pythoned poorly at least once."words = word_tokenize(new_text)

for w in words:

print(ps.stem(w))It

is

import

to

by

veri

pythonli

while

you

are

python

with

python

.

all

python

have

python

poorli

at

least

onc6. PARTS OF SPEECH TAGGING

One of the more powerful aspects of the NLTK module is the Part of Speech tagging that it can do for you. This means labeling words in a sentence as nouns, adjectives, verbs…etc. Even more impressive, it also labels by tense, and more. Here’s a list of the tags, what they mean, and some examples:

POS tag list:

CC coordinating conjunction

CD cardinal digit

DT determiner

EX existential there (like: "there is" ... think of it like "there exists")

FW foreign word

IN preposition/subordinating conjunction

JJ adjective 'big'

JJR adjective, comparative 'bigger'

JJS adjective, superlative 'biggest'

LS list marker 1)

MD modal could, will

NN noun, singular 'desk'

NNS noun plural 'desks'

NNP proper noun, singular 'Harrison'

NNPS proper noun, plural 'Americans'

PDT predeterminer 'all the kids'

POS possessive ending parent\'s

PRP personal pronoun I, he, she

PRP$ possessive pronoun my, his, hers

RB adverb very, silently,

RBR adverb, comparative better

RBS adverb, superlative best

RP particle give up

TO to go 'to' the store.

UH interjection errrrrrrrm

VB verb, base form take

VBD verb, past tense took

VBG verb, gerund/present participle taking

VBN verb, past participle taken

VBP verb, sing. present, non-3d take

VBZ verb, 3rd person sing. present takes

WDT wh-determiner which

WP wh-pronoun who, what

WP$ possessive wh-pronoun whose

WRB wh-abverb where, whenHow might we use this? While we’re at it, we’re going to cover a new sentence tokenizer, called the PunktSentenceTokenizer. This tokenizer is capable of unsupervised machine learning, so you can actually train it on any body of text that you use. First, let’s get some imports out of the way that we’re going to use:

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizerNow, let’s create our training and testing data

train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt")One is a State of the Union address from 2005, and the other is from 2006 from past President George W. Bush.

Next, we can train the Punkt tokenizer like:

custom_sent_tokenizer = PunktSentenceTokenizer(train_text)Then we can actually tokenize, using:

tokenized = custom_sent_tokenizer.tokenize(sample_text)Now we can finish up this part of speech tagging script by creating a function that will run through and tag all of the parts of speech per sentence like so:

def process_content():

try:

for i in tokenized[:5]:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

print(tagged)

except Exception as e:

print(str(e))

process_content()[('PRESIDENT', 'NNP'), ('GEORGE', 'NNP'), ('W.', 'NNP'), ('BUSH', 'NNP'), ("'S", 'POS'), ('ADDRESS', 'NNP'), ('BEFORE', 'IN'), ('A', 'NNP'), ('JOINT', 'NNP'), ('SESSION', 'NNP'), ('OF', 'IN'), ('THE', 'NNP'), ('CONGRESS', 'NNP'), ('ON', 'NNP'), ('THE', 'NNP'), ('STATE', 'NNP'), ('OF', 'IN'), ('THE', 'NNP'), ('UNION', 'NNP'), ('January', 'NNP'), ('31', 'CD'), (',', ','), ('2006', 'CD'), ('THE', 'NNP'), ('PRESIDENT', 'NNP'), (':', ':'), ('Thank', 'NNP'), ('you', 'PRP'), ('all', 'DT'), ('.', '.')]

[('Mr.', 'NNP'), ('Speaker', 'NNP'), (',', ','), ('Vice', 'NNP'), ('President', 'NNP'), ('Cheney', 'NNP'), (',', ','), ('members', 'NNS'), ('of', 'IN'), ('Congress', 'NNP'), (',', ','), ('members', 'NNS'), ('of', 'IN'), ('the', 'DT'), ('Supreme', 'NNP'), ('Court', 'NNP'), ('and', 'CC'), ('diplomatic', 'JJ'), ('corps', 'NN'), (',', ','), ('distinguished', 'JJ'), ('guests', 'NNS'), (',', ','), ('and', 'CC'), ('fellow', 'JJ'), ('citizens', 'NNS'), (':', ':'), ('Today', 'VB'), ('our', 'PRP$'), ('nation', 'NN'), ('lost', 'VBD'), ('a', 'DT'), ('beloved', 'VBN'), (',', ','), ('graceful', 'JJ'), (',', ','), ('courageous', 'JJ'), ('woman', 'NN'), ('who', 'WP'), ('called', 'VBD'), ('America', 'NNP'), ('to', 'TO'), ('its', 'PRP$'), ('founding', 'NN'), ('ideals', 'NNS'), ('and', 'CC'), ('carried', 'VBD'), ('on', 'IN'), ('a', 'DT'), ('noble', 'JJ'), ('dream', 'NN'), ('.', '.')]

[('Tonight', 'NN'), ('we', 'PRP'), ('are', 'VBP'), ('comforted', 'VBN'), ('by', 'IN'), ('the', 'DT'), ('hope', 'NN'), ('of', 'IN'), ('a', 'DT'), ('glad', 'JJ'), ('reunion', 'NN'), ('with', 'IN'), ('the', 'DT'), ('husband', 'NN'), ('who', 'WP'), ('was', 'VBD'), ('taken', 'VBN'), ('so', 'RB'), ('long', 'RB'), ('ago', 'RB'), (',', ','), ('and', 'CC'), ('we', 'PRP'), ('are', 'VBP'), ('grateful', 'JJ'), ('for', 'IN'), ('the', 'DT'), ('good', 'JJ'), ('life', 'NN'), ('of', 'IN'), ('Coretta', 'NNP'), ('Scott', 'NNP'), ('King', 'NNP'), ('.', '.')]

[('(', '('), ('Applause', 'NNP'), ('.', '.'), (')', ')')]

[('President', 'NNP'), ('George', 'NNP'), ('W.', 'NNP'), ('Bush', 'NNP'), ('reacts', 'VBZ'), ('to', 'TO'), ('applause', 'VB'), ('during', 'IN'), ('his', 'PRP$'), ('State', 'NNP'), ('of', 'IN'), ('the', 'DT'), ('Union', 'NNP'), ('Address', 'NNP'), ('at', 'IN'), ('the', 'DT'), ('Capitol', 'NNP'), (',', ','), ('Tuesday', 'NNP'), (',', ','), ('Jan', 'NNP'), ('.', '.')]The output should be a list of tuples, where the first element in the tuple is the word, and the second is the part of speech tag

At this point, we can begin to derive meaning, but there is still some work to do. The next topic that we’re going to cover is chunking, which is where we group words, based on their parts of speech, into hopefully meaningful groups.

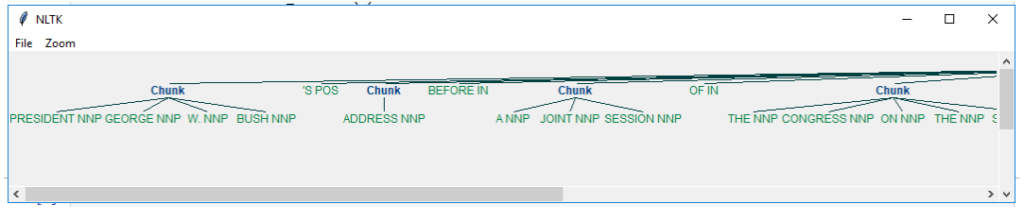

7. CHUNKING

Now that we know the parts of speech, we can do what is called chunking, and group words into hopefully meaningful chunks. One of the main goals of chunking is to group into what are known as “noun phrases.” These are phrases of one or more words that contain a noun, maybe some descriptive words, maybe a verb, and maybe something like an adverb. The idea is to group nouns with the words that are in relation to them.

+ = match 1 or more

? = match 0 or 1 repetitions.

* = match 0 or MORE repetitions

. = Any character except a new lineThe last things to note is that the part of speech tags are denoted with the “<” and “>” and we can also place regular expressions within the tags themselves, so account for things like “all nouns” (<N.*>)

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer

train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt")

custom_sent_tokenizer = PunktSentenceTokenizer(train_text)

tokenized = custom_sent_tokenizer.tokenize(sample_text)

def process_content():

try:

for i in tokenized:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

chunkGram = r"""Chunk: {<RB.?>*<VB.?>*<NNP>+<NN>?}"""

chunkParser = nltk.RegexpParser(chunkGram)

chunked = chunkParser.parse(tagged)

chunked.draw()

except Exception as e:

print(str(e))

process_content()

The main line here in question is:

chunkGram = r"""Chunk: {<RB.?>*<VB.?>*<NNP>+<NN>?}"""This line, broken down:

<RB.?>* = “0 or more of any tense of adverb,” followed by:

<VB.?>* = “0 or more of any tense of verb,” followed by:

+ = “One or more proper nouns,” followed by

? = “zero or one singular noun.”

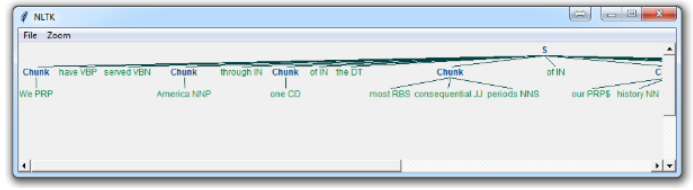

8. CHINKING

You may find that, after a lot of chunking, you have some words in your chunk you still do not want, but you have no idea how to get rid of them by chunking. You may find that chinking is your solution.

Chinking is a lot like chunking, it is basically a way for you to remove a chunk from a chunk. The chunk that you remove from your chunk is your chink.

The code is very similar, you just denote the chink, after the chunk, with }{ instead of the chunk’s {}.

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer

train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt")

custom_sent_tokenizer = PunktSentenceTokenizer(train_text)

tokenized = custom_sent_tokenizer.tokenize(sample_text)

def process_content():

try:

for i in tokenized[5:]:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

chunkGram = r"""Chunk: {<.*>+}

}<VB.?|IN|DT|TO>+{"""

chunkParser = nltk.RegexpParser(chunkGram)

chunked = chunkParser.parse(tagged)

chunked.draw()

except Exception as e:

print(str(e))

process_content()

Now, the main difference here is:

}<VB.?|IN|DT|TO>+{This means we’re removing from the chink one or more verbs, prepositions, determiners, or the word ‘to’.

Now that we’ve learned how to do some custom forms of chunking, and chinking, let’s discuss a built-in form of chunking that comes with NLTK, and that is named entity recognition.

9. NAME IDENTITY RECOGNITION

One of the most major forms of chunking in natural language processing is called “Named Entity Recognition.” The idea is to have the machine immediately be able to pull out “entities” like people, places, things, locations, monetary figures, and more.

This can be a bit of a challenge, but NLTK is this built in for us. There are two major options with NLTK’s named entity recognition: either recognize all named entities, or recognize named entities as their respective type, like people, places, locations, etc.

Here’s an example:

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer

train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt")

custom_sent_tokenizer = PunktSentenceTokenizer(train_text)

tokenized = custom_sent_tokenizer.tokenize(sample_text)

def process_content():

try:

for i in tokenized[5:]:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

namedEnt = nltk.ne_chunk(tagged, binary=True)

namedEnt.draw()

except Exception as e:

print(str(e))

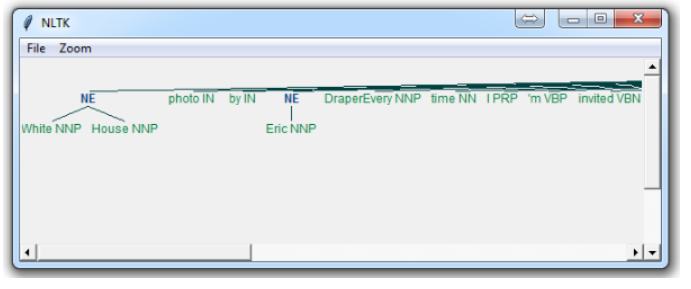

process_content()Here, with the option of binary = True, this means either something is a named entity, or not. There will be no further detail. The result is:

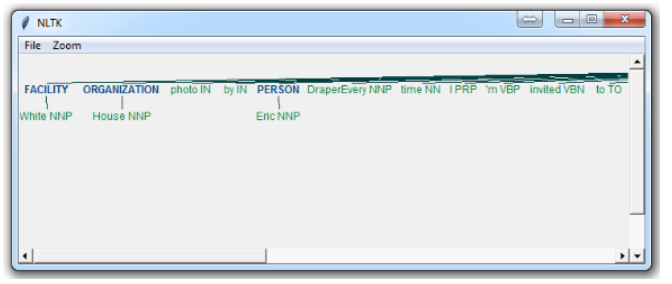

If you set binary = False, then the result is:

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer

train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt")

custom_sent_tokenizer = PunktSentenceTokenizer(train_text)

tokenized = custom_sent_tokenizer.tokenize(sample_text)

def process_content():

try:

for i in tokenized[5:]:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words)

namedEnt = nltk.ne_chunk(tagged, binary=False)

namedEnt.draw()

except Exception as e:

print(str(e))

process_content()

Immediately, you can see a few things. When Binary is False, it picked up the same things, but wound up splitting up terms like White House into “White” and “House” as if they were different, whereas we could see in the binary = True option, the named entity recognition was correct to say White House was part of the same named entity.

Depending on your goals, you may use the binary option how you see fit. Here are the types of Named Entities that you can get if you have binary as false:

NE Type and Examples:

ORGANIZATION – Georgia-Pacific Corp., WHO

PERSON – Eddy Bonte, President Obama

LOCATION – Murray River, Mount Everest

DATE – June, 2008-06-29

TIME – two fifty a m, 1:30 p.m.

MONEY – 175 million Canadian Dollars, GBP 10.40

PERCENT – twenty pct, 18.75 %

FACILITY – Washington Monument, Stonehenge

GPE – South East Asia, Midlothian

10. Lemmatization

A very similar operation to stemming is called lemmatizing. The major difference between these is, as you saw earlier, stemming can often create non-existent words, whereas lemmas are actual words.

So, your root stem, meaning the word you end up with, is not something you can just look up in a dictionary, but you can look up a lemma.

Some times you will wind up with a very similar word, but sometimes, you will wind up with a completely different word. Let’s see some examples.

from nltk.stem import WordNetLemmatizer

lemmatizer = WordNetLemmatizer()

print(lemmatizer.lemmatize("cats"))

print(lemmatizer.lemmatize("cacti"))

print(lemmatizer.lemmatize("geese"))

print(lemmatizer.lemmatize("rocks"))

print(lemmatizer.lemmatize("python"))

print(lemmatizer.lemmatize("better", pos="a"))

print(lemmatizer.lemmatize("best", pos="a"))

print(lemmatizer.lemmatize("run"))

print(lemmatizer.lemmatize("run",'v'))cat

cactus

goose

rock

python

good

best

run

run*Note: *Here, we’ve got a bunch of examples of the lemma for the words that we use. The only major thing to note is that lemmatize takes a part of speech parameter, “pos.” If not supplied, the default is “noun.” This means that an attempt will be made to find the closest noun, which can create trouble for you. Keep this in mind if you use lemmatizing!

11. CORPORA

The NLTK corpus is a massive dump of all kinds of natural language data sets that are definitely worth taking a look at.

Almost all of the files in the NLTK corpus follow the same rules for accessing them by using the NLTK module, but nothing is magical about them. These files are plain text files for the most part, some are XML and some are other formats, but they are all accessible by you manually, or via the module and Python. Let’s talk about viewing them manually.

Depending on your installation, your nltk_data directory might be hiding in a multitude of locations. To figure out where it is, head to your Python directory, where the NLTK module is. If you do not know where that is, use the following code:

import nltk

print(nltk.__file__)C:\Users\divya\Anaconda3\lib\site-packages\nltk\__init__.pyThe important blurb of code is:

if sys.platform.startswith('win'):

# Common locations on Windows:

path += [

str(r'C:\nltk_data'), str(r'D:\nltk_data'), str(r'E:\nltk_data'),

os.path.join(sys.prefix, str('nltk_data')),

os.path.join(sys.prefix, str('lib'), str('nltk_data')),

os.path.join(os.environ.get(str('APPDATA'), str('C:\\')), str('nltk_data'))

]

else:

# Common locations on UNIX & OS X:

path += [

str('/usr/share/nltk_data'),

str('/usr/local/share/nltk_data'),

str('/usr/lib/nltk_data'),

str('/usr/local/lib/nltk_data')

]There, you can see the various possible directories for the nltk_data. If you’re on Windows, chances are it is in your appdata, in the local directory. To get there, you will want to open your file browser, go to the top, and type in %appdata%

Next click on roaming, and then find the nltk_data directory. In there, you will have your corpora file. The full path is something like: C:\Users\yourname\AppData\Roaming\nltk_data\corpora

Within here, you have all of the available corpora, including things like books, chat logs, movie reviews, and a whole lot more.

Now, we’re going to talk about accessing these documents via NLTK. As you can see, these are mostly text documents, so you could just use normal Python code to open and read documents. That said, the NLTK module has a few nice methods for handling the corpus, so you may find it useful to use their methology. Here’s an example of us opening the Gutenberg Bible, and reading the first few lines:

from nltk.tokenize import sent_tokenize, PunktSentenceTokenizer

from nltk.corpus import gutenberg

# sample text

sample = gutenberg.raw("bible-kjv.txt")

tok = sent_tokenize(sample)

for x in range(5):

print(tok[x])[The King James Bible] The Old Testament of the King James Bible The First Book of Moses: Called Genesis 1:1 In the beginning God created the heaven and the earth. 1:2 And the earth was without form, and void; and darkness was upon the face of the deep. And the Spirit of God moved upon the face of the waters. 1:3 And God said, Let there be light: and there was light. 1:4 And God saw the light, that it was good: and God divided the light from the darkness.

12. WORDNET

WordNet is a lexical database for the English language, which was created by Princeton, and is part of the NLTK corpus.

You can use WordNet alongside the NLTK module to find the meanings of words, synonyms, antonyms, and more. Let’s cover some examples.

First, you’re going to need to import wordnet:

from nltk.corpus import wordnetThen, we’re going to use the term “program” to find synsets like so:

syns = wordnet.synsets("program")An example of a synset:

print(syns[0].name())plan.n.01

print(syns[0].lemmas()[0].name())plan

Definition of that first synset:

print(syns[0].definition())a series of steps to be carried out or goals to be accomplished

Examples of the word in use:

print(syns[0].examples())['they drew up a six-step plan', 'they discussed plans for a new bond issue']

Next, how might we discern synonyms and antonyms to a word? The lemmas will be synonyms, and then you can use .antonyms to find the antonyms to the lemmas. As such, we can populate some lists like

synonyms = []

antonyms = []

for syn in wordnet.synsets("good"):

for l in syn.lemmas():

synonyms.append(l.name())

if l.antonyms():

antonyms.append(l.antonyms()[0].name())

print(set(synonyms)){'respectable', 'upright', 'good', 'beneficial', 'honest', 'expert', 'unspoiled', 'estimable', 'adept', 'safe', 'goodness', 'thoroughly', 'soundly', 'effective', 'skillful', 'near', 'unspoilt', 'just', 'commodity', 'full', 'skilful', 'dependable', 'in_force', 'serious', 'salutary', 'sound', 'undecomposed', 'ripe', 'secure', 'in_effect', 'proficient', 'well', 'right', 'honorable', 'trade_good', 'practiced', 'dear'}

{'evilness', 'evil', 'bad', 'ill', 'badness'}

As you can see, we got many more synonyms than antonyms, since we just looked up the antonym for the first lemma, but you could easily balance this buy also doing the exact same process for the term “bad.”

Next, we can also easily use WordNet to compare the similarity of two words and their tenses, by incorporating the Wu and Palmer method for semantic related-ness.

Let’s compare the noun of “ship” and “boat:”

w1 = wordnet.synset('ship.n.01')

w2 = wordnet.synset('boat.n.01')

print(w1.wup_similarity(w2))0.9090909090909091

Compare ship and car:

w1 = wordnet.synset('ship.n.01')

w2 = wordnet.synset('car.n.01')

print(w1.wup_similarity(w2))0.6956521739130435

Compare ship and cat :

w1 = wordnet.synset('ship.n.01')

w2 = wordnet.synset('cat.n.01')

print(w1.wup_similarity(w2))0.32

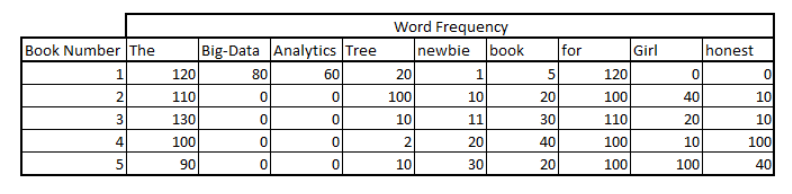

13. TEXT CLASSIFICATION

Now that we’re comfortable with NLTK, let’s try to tackle text classification. The goal with text classification can be pretty broad. Maybe we’re trying to classify text as about politics or the military. Maybe we’re trying to classify it by the gender of the author who wrote it. A fairly popular text classification task is to identify a body of text as either spam or not spam, for things like email filters. In our case, we’re going to try to create a sentiment analysis algorithm.

To do this, we’re going to start by trying to use the movie reviews database that is part of the NLTK corpus. From there we’ll try to use words as “features” which are a part of either a positive or negative movie review. The NLTK corpus movie_reviews data set has the reviews, and they are labeled already as positive or negative. This means we can train and test with this data. First, let’s wrangle our data.

import nltk

import random

from nltk.corpus import movie_reviews

documents = [(list(movie_reviews.words(fileid)), category)

for category in movie_reviews.categories()

for fileid in movie_reviews.fileids(category)]

random.shuffle(documents)

print(documents[1])

all_words = []

for w in movie_reviews.words():

all_words.append(w.lower())

all_words = nltk.FreqDist(all_words)

print(all_words.most_common(15))

print(all_words["stupid"])(['lean', ',', 'mean', ',', 'escapist', 'thrillers', 'are', 'a', 'tough', 'product', 'to', 'come', 'by', '.', 'most', 'are', 'unnecessarily', 'complicated', ',', 'and', 'others', 'have', 'no', 'sense', 'of', 'expediency', '--', 'the', 'thrill', '-', 'ride', 'effect', 'gets', 'lost', 'in', 'the', 'cumbersome', 'plot', '.', 'perhaps', 'the', 'ultimate', 'escapist', 'thriller', 'was', 'the', 'fugitive', ',', 'which', 'featured', 'none', 'of', 'the', 'flash', '-', 'bang', 'effects', 'of', 'today', "'", 's', 'market', 'but', 'rather', 'a', 'bread', '-', 'and', '-', 'butter', ',', 'textbook', 'example', 'of', 'what', 'a', 'clever', 'script', 'and', 'good', 'direction', 'is', 'all', 'about', '.', 'the', 'latest', 'tony', 'scott', 'movie', ',', 'enemy', 'of', 'the', 'state', ',', 'doesn', "'", 't', 'make', 'it', 'to', 'that', 'level', '.', 'it', "'", 's', 'a', 'true', 'nineties', 'product', 'that', 'runs', 'like', 'greased', 'lightning', 'through', 'a', 'maze', 'of', 'cell', 'phones', 'and', 'laptop', 'computers', ',', 'without', 'looking', 'back', '.', 'although', 'director', 'scott', 'has', 'made', 'missteps', 'in', 'the', 'past', ',', 'such', 'as', 'the', 'lame', 'thriller', 'the', 'fan', ',', 'he', "'", 's', 'generated', 'a', 'good', 'deal', 'of', 'energy', 'in', 'pictures', 'like', 'crimson', 'tide', 'and', 'top', 'gun', '.', 'that', 'vibrant', 'spirit', 'is', 'present', 'here', ',', 'shown', 'in', 'well', '-', 'timed', 'and', 'carefully', 'planned', 'chase', 'scenes', 'that', 'give', 'the', 'movie', 'an', 'aura', 'of', 'sheer', 'speed', '.', 'enemy', 'of', 'the', 'state', 'also', 'features', 'an', 'unprecedented', 'use', 'of', 'amazing', 'cinematography', '--', 'director', 'of', 'photography', 'daniel', 'mindel', 'throws', 'a', 'staggering', 'amount', 'of', 'different', 'views', ',', 'angles', ',', 'lenses', ',', 'and', 'film', 'stocks', 'at', 'the', 'audience', 'that', 'goes', 'a', 'long', 'way', 'toward', 'involving', 'the', 'audience', 'in', 'the', 'movie', '.', 'enemy', 'is', 'truly', 'a', 'visual', 'experience', ',', 'and', 'that', "'", 's', 'only', 'one', 'of', 'the', 'reasons', 'it', "'", 's', 'such', 'a', 'fun', 'watch', '.', 'the', 'movie', 'lights', 'up', 'with', 'an', 'aging', 'senator', 'visited', 'by', 'nsa', 'deputy', 'chief', 'thomas', 'reynolds', '(', 'jon', 'voight', ')', '.', 'reynolds', 'wants', 'a', 'new', 'communications', 'act', 'passed', 'to', 'allow', 'the', 'government', 'free', 'reign', 'in', 'the', 'use', 'of', 'surveilance', 'equipment', ',', 'but', 'the', 'senator', 'plans', 'to', 'bury', 'the', 'bill', 'in', 'committee', '.', 'reynolds', 'has', 'the', 'senator', 'offed', ',', 'but', 'not', 'before', 'the', 'murder', 'is', 'caught', 'on', 'a', 'naturalist', "'", 's', 'camera', '.', 'by', 'an', 'extremist', 'chain', 'of', 'events', ',', 'the', 'tape', 'ends', 'up', 'in', 'labor', 'lawyer', 'robert', 'dean', '(', 'will', 'smith', ')', "'", 's', 'posession', ',', 'and', 'it', "'", 's', 'not', 'long', 'before', 'he', "'", 's', 'running', 'from', 'reynolds', "'", 'cronies', '.', 'it', "'", 's', 'only', 'with', 'the', 'help', 'of', 'an', 'ex', '-', 'spook', 'named', 'brill', '(', 'gene', 'hackman', ')', 'that', 'dean', 'is', 'able', 'to', 'get', 'to', 'the', 'bottom', 'of', 'things', '.', 'the', 'acting', 'is', 'top', 'notch', ',', 'and', 'the', 'three', 'principles', '-', 'smith', ',', 'hackman', ',', 'and', 'voight', '-', 'are', 'generally', 'more', 'mature', 'and', 'excellent', 'all', 'around', '.', 'smith', 'puts', 'aside', 'the', 'wisecracking', 'act', 'and', 'becomes', 'a', 'normal', 'human', 'being', ';', 'voight', 'tones', 'down', 'the', 'amount', 'of', 'sneer', 'he', 'puts', 'into', 'his', 'character', 'for', 'greater', 'ominpotence', ';', 'and', 'hackman', 'is', 'simply', 'over', 'the', 'top', 'in', 'the', 'mysterioso', 'role', '.', 'smith', "'", 's', 'regular', 'joe', 'comes', 'off', 'particularly', 'well', ',', 'as', 'he', 'runs', 'from', 'authorties', 'for', 'reasons', 'that', 'he', 'knows', 'not', '.', 'the', 'supports', 'are', 'also', 'in', 'fine', 'form', ',', 'lending', 'credibility', 'to', 'the', 'main', 'roles', 'and', 'advancing', 'the', 'plot', 'in', 'key', 'areas', '.', 'this', 'is', ',', 'for', 'the', 'holiday', 'crowd', ',', 'the', 'hot', 'ticket', ';', 'as', 'well', 'as', 'anyone', 'looking', 'for', 'a', 'serving', 'of', 'genuine', 'action', 'in', 'a', 'market', 'that', 'is', 'otherwise', 'lacking', '.'], 'pos')

[(',', 77717), ('the', 76529), ('.', 65876), ('a', 38106), ('and', 35576), ('of', 34123), ('to', 31937), ("'", 30585), ('is', 25195), ('in', 21822), ('s', 18513), ('"', 17612), ('it', 16107), ('that', 15924), ('-', 15595)]

253

It may take a moment to run this script, as the movie reviews dataset is somewhat large. Let’s cover what is happening here.

After importing the data set we want, you see:

documents = [(list(movie_reviews.words(fileid)), category)

for category in movie_reviews.categories()

for fileid in movie_reviews.fileids(category)]Basically, in plain English, the above code is translated to: In each category (we have pos or neg), take all of the file IDs (each review has its own ID), then store the word_tokenized version (a list of words) for the file ID, followed by the positive or negative label in one big list.

Next, we use random to shuffle our documents. This is because we’re going to be training and testing. If we left them in order, chances are we’d train on all of the negatives, some positives, and then test only against positives. We don’t want that, so we shuffle the data.

Then, just so you can see the data you are working with, we print out documents[1], which is a big list, where the first element is a list the words, and the 2nd element is the “pos” or “neg” label.

Next, we want to collect all words that we find, so we can have a massive list of typical words. From here, we can perform a frequency distribution, to then find out the most common words. As you will see, the most popular “words” are actually things like punctuation, “the,” “a” and so on, but quickly we get to legitimate words. We intend to store a few thousand of the most popular words, so this shouldn’t be a problem.

print(all_words.most_common(15))[(',', 77717), ('the', 76529), ('.', 65876), ('a', 38106), ('and', 35576), ('of', 34123), ('to', 31937), ("'", 30585), ('is', 25195), ('in', 21822), ('s', 18513), ('"', 17612), ('it', 16107), ('that', 15924), ('-', 15595)]

The above gives you the 15 most common words. You can also find out how many occurences a word has by doing:

print(all_words["stupid"])253

Next up, we’ll begin storing our words as features of either positive or negative movie reviews.

14. CONVERTING WORDS TO FEATURES

we are compiling the feature lists of words from positive reviews and words from the negative reviews to hopefully see trends in specific types of words in positive or negative reviews.

import nltk

import random

from nltk.corpus import movie_reviews

documents = [(list(movie_reviews.words(fileid)), category)

for category in movie_reviews.categories()

for fileid in movie_reviews.fileids(category)]

random.shuffle(documents)

all_words = []

for w in movie_reviews.words():

all_words.append(w.lower())

all_words = nltk.FreqDist(all_words)

word_features = list(all_words.keys())[:3000]Mostly the same as before, only with now a new variable, word_features, which contains the top 3,000 most common words. Next, we’re going to build a quick function that will find these top 3,000 words in our positive and negative documents, marking their presence as either positive or negative:

def find_features(document):

words = set(document)

features = {}

for w in word_features:

features[w] = (w in words)

return featuresNext, we can print one feature set like: