Table of contents

- Introduction

- Problem statement

- ML formulation

- Performance Metric

- Understanding the data

- Data Preparation

- Modeling

- Training

- Prediction of segmentation maps on test data

- Future Works

- References

1. Introduction

What is Object Detection?

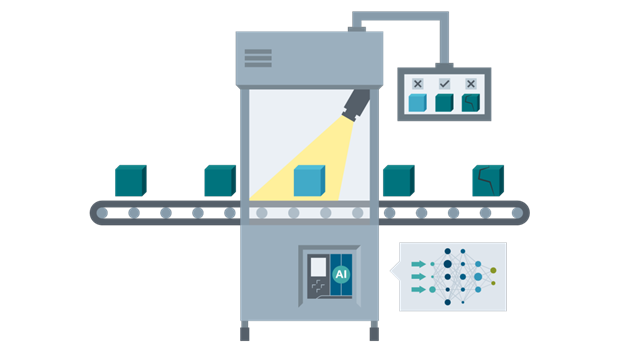

Given an image, we humans can identify the objects present in that image. For example, we can detect whether the image has a car, trees, people, etc. If we can analyze the images and detect the objects, can we also teach machines to do the same?

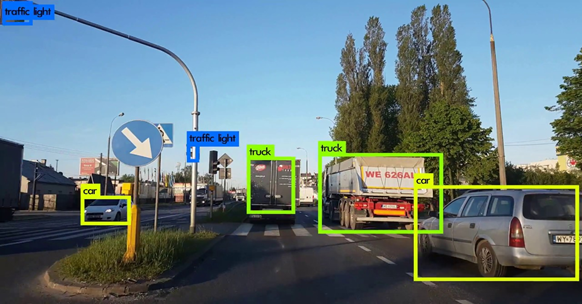

The answer is yes. With the rise of deep learning and computer vision, we can automate object detection. We can build deep learning and computer vision models that detect and locate objects, calculate the distance between them, predict their future stages, etc. Object detection has a wide range of applications in computer vision and machine learning. Object tracking, CCTV surveillance, human activity recognition, and even self-driving cars make use of this technology. To understand it better, consider the accompanying image.

The figure shows object detection on a road traffic image as seen from a vehicle. Here we can see that it is detecting other vehicles, traffic signals, etc. If the vehicle is a self-driving car, it should be able to detect the driving path, other vehicles, pedestrians, traffic signals, etc., for smooth and safe driving.

If you want to know more about object detection you can refer here.

Now that we have understood object detection, let us move to a slightly advanced technique called image segmentation.

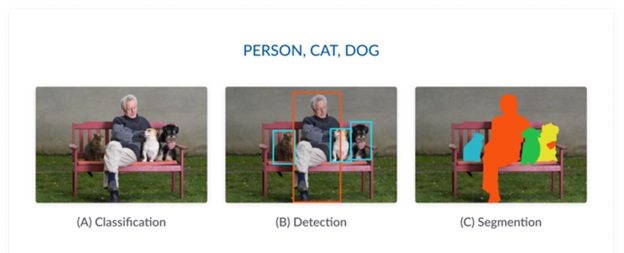

We can easily understand the difference between object detection and image segmentation by analyzing the figure below.

Both methods try to identify and locate the objects in an image. In object detection, this is achieved using bounding boxes. The algorithm or model locates the objects by drawing a rectangular bounding box around them. In image segmentation, each pixel of the image is annotated. That means, given an image, the segmentation model performs pixel-wise classification, assigning every pixel of the image to a meaningful class of objects. This is also known as dense prediction because it predicts the meaning of each pixel by identifying the object it belongs to.

“The return format of image Segmentation is called a mask: an image that has the same size as the original image, but for each pixel, it has simply a boolean indicating whether the object is present or not present.”
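To make the difference concrete, here is a minimal sketch with a toy mask. The 6x6 array and the helper name `mask_to_bbox` are made up for illustration. It shows that a mask stores one boolean per pixel, while a bounding box keeps only the four extreme coordinates of the same object.

```python
import numpy as np

# Toy 6x6 boolean mask: True marks pixels that belong to the object.
mask = np.zeros((6, 6), dtype=bool)
mask[2:4, 1:5] = True  # a small 2x4 rectangular "object"


def mask_to_bbox(mask):
    """Return (row_min, col_min, row_max, col_max) of the True region, or None if empty."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None  # nothing to box in an empty mask
    return int(rows.min()), int(cols.min()), int(rows.max()), int(cols.max())


bbox = mask_to_bbox(mask)
print(mask.shape, bbox)
```

A bounding box can always be derived from a mask in this way, but not the other way around, which is why segmentation is the more detailed of the two outputs.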

We will be using this technique in this case study. To know more about image segmentation, you can refer to this.

Now that we have an idea about object detection and image segmentation, let us move further and understand the problem statement.

2. Problem Statement

We are given a set of images of some products. Some of the products are defective and some are not. Given the image of a product, we need to detect if it is defective or not. We also need to locate this defect.

3. ML Formulation

The problem can be formulated as an image segmentation task. Given an image of a product, we need to produce the segmentation mask for it. If the product is defective, the segmentation map should be able to locate that defect.

4. Performance metric

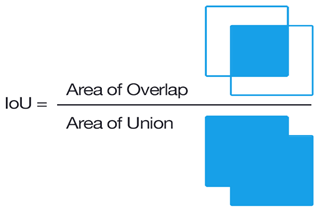

One of the most commonly used metrics in segmentation problems is the Intersection-over-Union (IoU) score. Refer to the accompanying image, which shows how the IoU score is calculated.

IoU is the area of overlap between the predicted segmentation and the true segmentation, divided by the area of union between the predicted segmentation and the true segmentation.

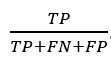

We can also write the IoU score as:
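In standard notation, one common way to write it is given below. The symbols are chosen here for illustration: $P$ is the set of pixels predicted as defect, $G$ is the set of ground-truth defect pixels, and $TP$, $FP$, $FN$ are the pixel-level counts of true positives, false positives, and false negatives.

```latex
\mathrm{IoU} = \frac{|P \cap G|}{|P \cup G|} = \frac{TP}{TP + FP + FN}
```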

You can refer here for more information.

This metric ranges from 0 to 1. An IoU score of 1 indicates a perfect overlap and an IoU score of 0 indicates no overlap at all.
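As a quick illustration of how this score behaves, here is a minimal NumPy sketch for binary masks. The function name `iou_score`, the `eps` term, and the toy arrays are assumptions for illustration, not the implementation used in this case study.

```python
import numpy as np


def iou_score(y_true, y_pred, eps=1e-7):
    """IoU between two binary masks of the same shape."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    intersection = np.logical_and(y_true, y_pred).sum()
    union = np.logical_or(y_true, y_pred).sum()
    # eps avoids 0/0 when both masks are empty (treated here as a perfect match).
    return float((intersection + eps) / (union + eps))


# Toy example: the two masks share 2 pixels and cover 6 pixels in total.
truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0]])
pred = np.array([[0, 1, 1, 0],
                 [0, 1, 1, 0]])
score = iou_score(truth, pred)
print(round(score, 3))
```

Here the intersection is 2 pixels and the union is 6 pixels, so the score is about one third. Comparing a mask with itself gives a score of 1.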

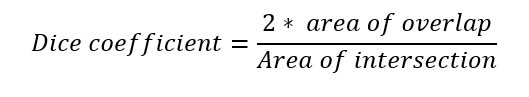

The loss function used in this case study is Dice loss. Dice loss can be thought of as 1 - Dice coefficient, where the Dice coefficient is defined as,
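In its usual form, the Dice coefficient is twice the overlap divided by the total size of both masks, $\mathrm{Dice} = \frac{2|P \cap G|}{|P| + |G|}$, where $P$ is the prediction and $G$ the ground truth (symbols chosen here for illustration). A minimal NumPy sketch of the coefficient and the loss follows. The function names and the `smooth` term are assumptions, not the exact code used in this case study.

```python
import numpy as np


def dice_coefficient(y_true, y_pred, smooth=1e-7):
    """Dice coefficient for masks holding 0/1 values or probabilities in [0, 1]."""
    y_true = np.asarray(y_true, dtype=np.float64).ravel()
    y_pred = np.asarray(y_pred, dtype=np.float64).ravel()
    intersection = np.sum(y_true * y_pred)
    # smooth keeps the ratio defined when both masks are empty.
    return float((2.0 * intersection + smooth) / (y_true.sum() + y_pred.sum() + smooth))


def dice_loss(y_true, y_pred):
    """Dice loss is one minus the Dice coefficient."""
    return 1.0 - dice_coefficient(y_true, y_pred)


# Toy example: overlap of 2 pixels, 4 pixels in each mask.
truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0]])
pred = np.array([[0, 1, 1, 0],
                 [0, 1, 1, 0]])
loss = dice_loss(truth, pred)
print(round(loss, 3))
```

Because the product `y_true * y_pred` also works with predicted probabilities, this form is differentiable, which is one reason Dice is often used as a loss while IoU is reported as a metric.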

You can read more about these metrics here.

5. Understanding the data

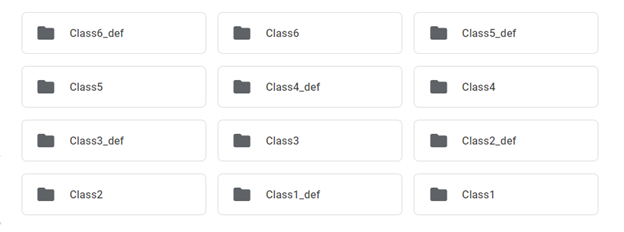

The dataset contains two folders: train and test. The train set consists of six classes of images. Each class is split into two folders, one containing 1000 non-defective images and the other containing 130 defective images. The accompanying figure shows the folders inside the train folder.

The folder name ending with “_def” contains the defective images of the corresponding class, and those without “_def” represent the non-defective images.
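The naming rule above is enough to separate the two kinds of folders programmatically. The following is only a sketch: the function name `split_class_folders` is hypothetical, and the only thing it relies on from the dataset is the “_def” suffix.

```python
from pathlib import Path


def split_class_folders(train_dir):
    """Group the sub-folders of train_dir into defective and non-defective lists."""
    defective, non_defective = [], []
    for folder in sorted(Path(train_dir).iterdir()):
        if not folder.is_dir():
            continue  # ignore stray files next to the class folders
        # Folders whose names end in "_def" hold the defective images.
        if folder.name.endswith("_def"):
            defective.append(folder)
        else:
            non_defective.append(folder)
    return defective, non_defective


# Usage (the path is a placeholder):
# defective_dirs, good_dirs = split_class_folders("train")
```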

The test folder contains a set of 120 defective images whose segmentation map is to be predicted.

6. Data Preparation

6.1 Preparing the image data and segmentation masks

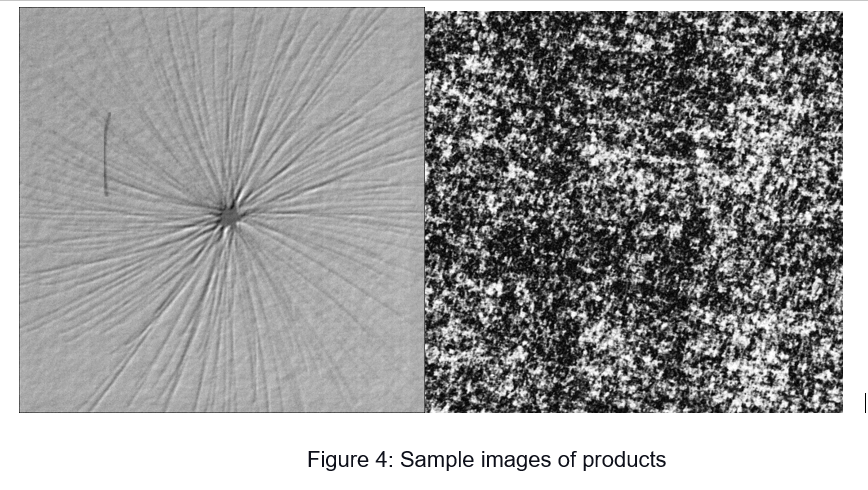

Now we need to prepare the image data and the corresponding segmentation mask for each image. We have the images divided into twelve folders. Let us see some of the images.

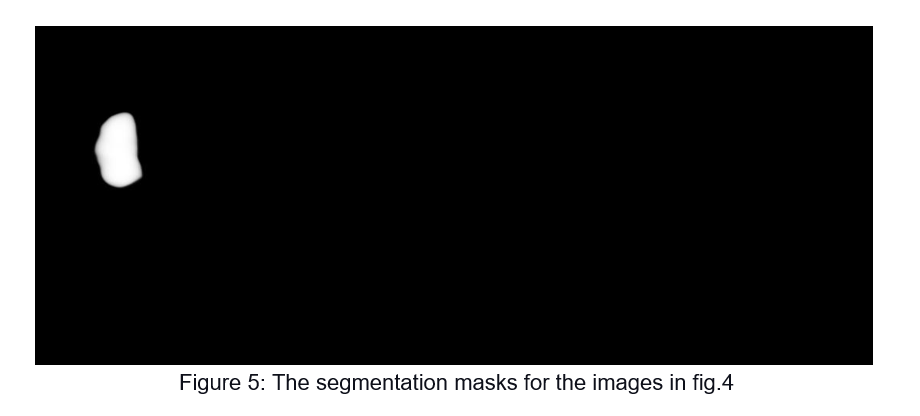

The first image represents a defective product and the second one represents a non-defective product. Now we need to prepare the segmentation maps for these images. The segmentation map should detect the defective parts in the image. For the above images, the expected segmentation maps will be like this.

We can see that in the first image, the elliptical region represents the defective part. The second image is blank because it does not have a defect.

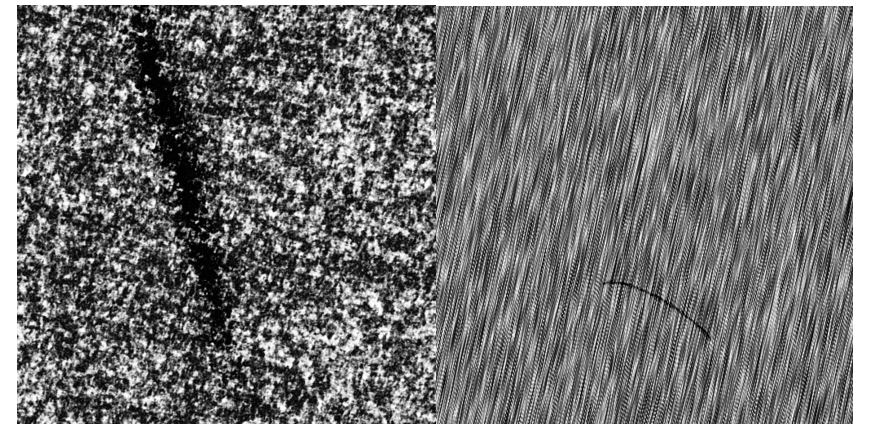

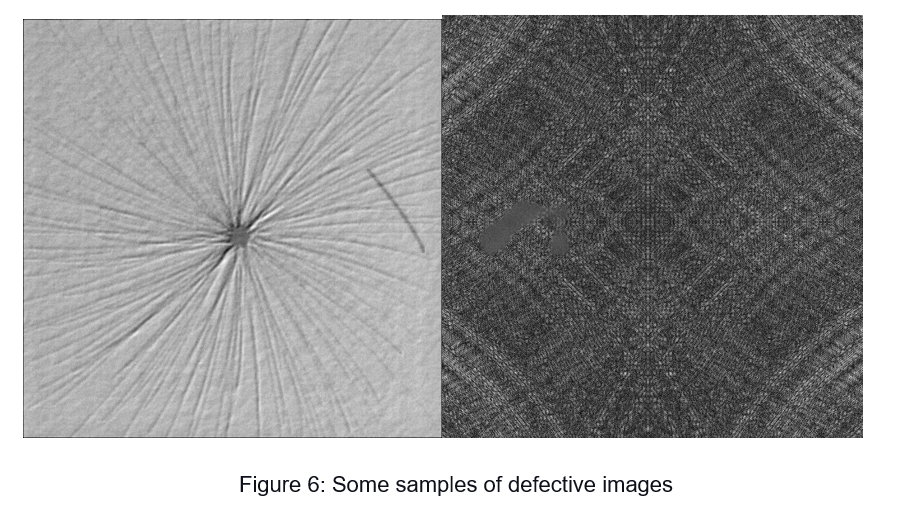

Let us analyze some more defective images.

We can see that the defects appear as curves or lines in the image. Therefore, to mark these regions as defects, we can make use of ellipses.

But how do we prepare the segmentation mask? Does it require manual labeling?

Fortunately, we are given another file that contains information about the segmentation mask.

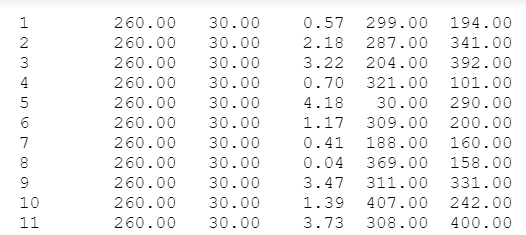

Each row contains information about the masked region of an image. The columns represent the following: the filename of the image, the semi-major axis of the ellipse, the semi-minor axis of the ellipse, the rotation angle of the ellipse, the x-position of the center of the ellipse, and the y-position of the center of the ellipse.

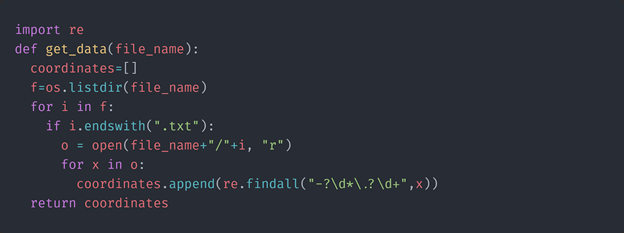

This data for drawing the ellipse is obtained using the get_data function as shown below
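As a rough idea of what such a parsing step could look like, here is a small sketch. It is not the original get_data function: the name `parse_ellipse_labels`, the whitespace-separated layout, and the sample row with its values are all assumptions for illustration. Only the column order follows the description above.

```python
def parse_ellipse_labels(lines):
    """Parse label rows into a dict keyed by image filename.

    Assumed row layout (hypothetical, whitespace-separated):
    filename semi_major semi_minor rotation x_center y_center
    """
    labels = {}
    for line in lines:
        parts = line.split()
        if len(parts) != 6:
            continue  # skip blank or malformed rows
        name = parts[0]
        semi_major, semi_minor, rotation, x_center, y_center = map(float, parts[1:])
        labels[name] = {
            "semi_major": semi_major,
            "semi_minor": semi_minor,
            "rotation": rotation,
            "x_center": x_center,
            "y_center": y_center,
        }
    return labels


# Made-up sample row, only to show the expected shape of the data.
sample = ["1.png 44.0 9.0 0.44 284.0 88.0", ""]
labels = parse_ellipse_labels(sample)
print(labels["1.png"])
```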

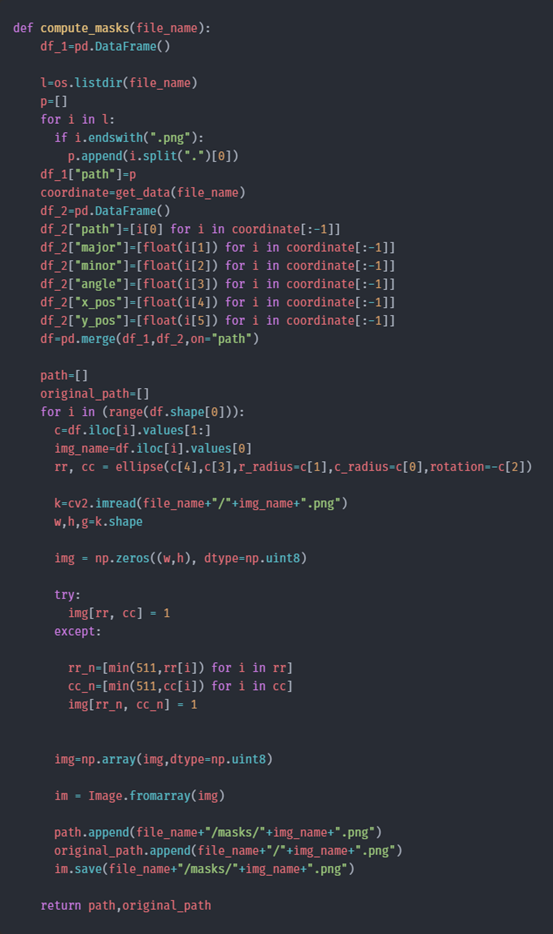

We can use this information and draw an elliptical segmentation mask using the skimage function.

Note that this information is available only for defective images. For non-defective images, we need to create blank images as the segmentation masks.
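To show the geometry behind both kinds of masks, here is a NumPy-only sketch. It is a stand-in for the skimage-based code, not a copy of it (`skimage.draw.ellipse` provides the same kind of drawing directly). The function names, the use of radians, and the direction of rotation are assumptions for illustration.

```python
import numpy as np


def ellipse_mask(shape, center_x, center_y, semi_major, semi_minor, rotation=0.0):
    """Binary mask with 1 inside the rotated ellipse and 0 elsewhere.

    rotation is assumed to be in radians, measured in image coordinates.
    """
    rows, cols = np.indices(shape)
    dx = cols - center_x
    dy = rows - center_y
    cos_t, sin_t = np.cos(rotation), np.sin(rotation)
    # Rotate pixel offsets into the ellipse's own axes.
    u = dx * cos_t + dy * sin_t
    v = -dx * sin_t + dy * cos_t
    inside = (u / semi_major) ** 2 + (v / semi_minor) ** 2 <= 1.0
    return inside.astype(np.uint8)


def blank_mask(shape):
    """All-zero mask for a non-defective image."""
    return np.zeros(shape, dtype=np.uint8)


defect = ellipse_mask((64, 64), center_x=32, center_y=32, semi_major=20, semi_minor=8)
no_defect = blank_mask((64, 64))
print(defect.sum(), no_defect.sum())
```

Whatever convention the label file actually uses for the angle would have to be checked against a few images before relying on a sketch like this.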

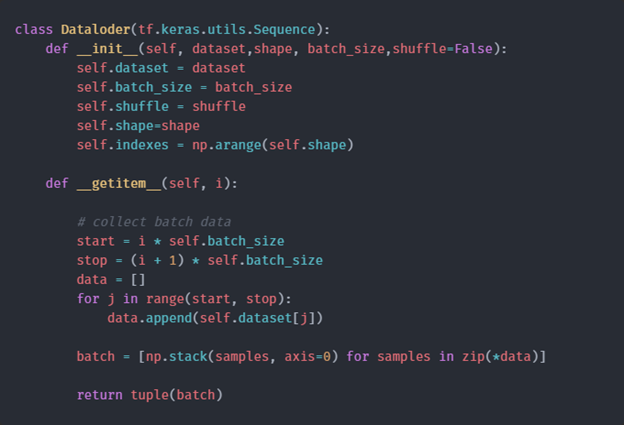

6.2 Loading the data

The structured data is obtained in the form shown below.

The column “images” contains the full file path for each image and the “mask” column contains the corresponding mask images.

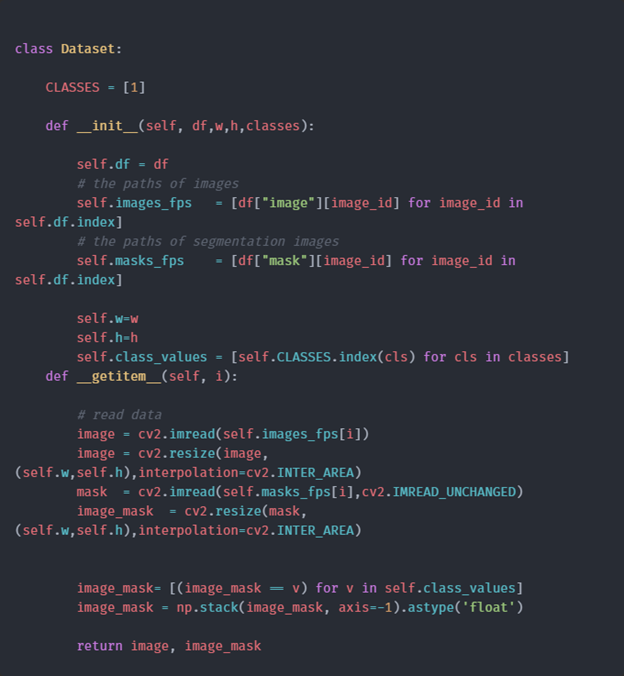

The next step is to load data.

7. Modeling

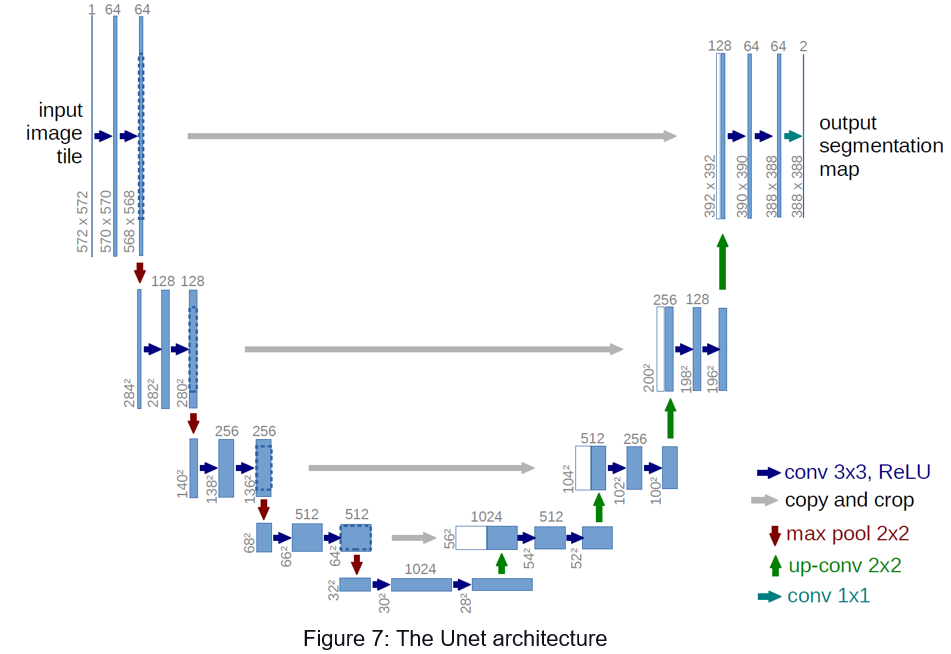

Now that we have all the data, the next step is to find a model that can generate the segmentation masks for images. Let me introduce the UNet model, which is very popular for image segmentation tasks.

The UNet architecture contains two paths: the contracting path and the expanding path. The accompanying figure gives a better understanding of the UNet architecture.

The model structure resembles the English letter “U”, hence the name UNet. The left side of the model contains the contracting path (also called the encoder), which helps to capture the context in the image. The encoder is just a traditional stack of convolutional and max-pooling layers. Here we can see that the pooling layers reduce the height and width of the feature maps, while the convolutional layers increase the depth, or number of channels. At the end of the contracting path, the model understands the shapes, patterns, edges, etc. that are present in the image, but it loses the information about “where” they are present.

Since our problem is to obtain the segmentation maps for images, the information we obtain from the contracting path alone will not be sufficient. We need a high resolution image as the output in which all pixels are classified.

“If we use a regular convolutional network with pooling layers and dense layers, we will lose the “WHERE” information and only retain the “WHAT” information which is not what we want. In case of segmentation we need both “WHAT” as well as “WHERE” information.” So we need to upsample the feature maps to recover the “where” information. This is done in the expanding path, which is on the right side. The expanding path (also called the decoder) is used for the localization of the captured context using upsampling techniques. There are various upsampling techniques, such as bilinear interpolation, the nearest neighbor approach, and transposed convolution. If you are interested in reading more about upsampling techniques, you can refer to this.
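The effect of pooling and upsampling on the “where” information can be seen on a tiny array. This is only a NumPy illustration of 2x2 max pooling followed by nearest neighbor upsampling. The helper names are made up, and it is not part of the UNet model itself.

```python
import numpy as np


def max_pool_2x2(x):
    """2x2 max pooling with stride 2 on a 2-D array with even height and width."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


def upsample_nearest_2x(x):
    """Nearest neighbor upsampling: repeat every pixel twice along each axis."""
    return x.repeat(2, axis=0).repeat(2, axis=1)


image = np.zeros((4, 4), dtype=int)
image[0, 1] = 9  # a single bright pixel at row 0, column 1

pooled = max_pool_2x2(image)            # shape (2, 2): the "what" survives
restored = upsample_nearest_2x(pooled)  # shape (4, 4): the exact "where" does not

print(pooled)
print(restored)
```

After pooling, the value 9 is still present, but it is only known to lie somewhere in the top-left 2x2 block. Upsampling brings back the original size, yet it spreads the value over that whole block instead of the original pixel.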

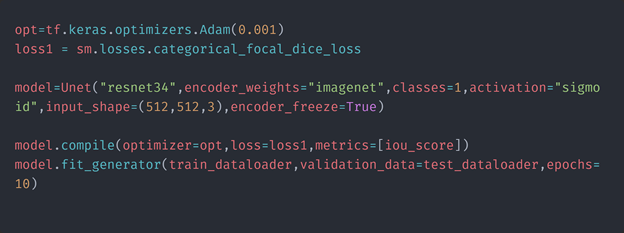

8. Training

Now, we have prepared all the data for training, and the model is also decided. Now let us train the model.

Since the number of non-defective images is much higher than the number of defective images, we take only a sample of the non-defective images to get better results. The model was compiled using the Adam optimizer, with Dice loss as the loss function. The performance metric used was the IoU score.
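The sampling step can be sketched as follows. This is a hedged illustration only: the function name `undersample`, the file names, the seed, and the choice of keeping as many non-defective images as defective ones are assumptions. Only the counts of 1000 and 130 per class come from the dataset description.

```python
import random


def undersample(non_defective, n_keep, seed=42):
    """Return a reproducible random subset of the non-defective items."""
    rng = random.Random(seed)
    n_keep = min(n_keep, len(non_defective))
    return rng.sample(non_defective, n_keep)


# Hypothetical file names for one class.
non_defective_paths = [f"Class1/{i}.png" for i in range(1000)]
defective_paths = [f"Class1_def/{i}.png" for i in range(130)]

# Assumption: keep one non-defective image per defective image.
sampled = undersample(non_defective_paths, n_keep=len(defective_paths))
training_paths = defective_paths + sampled
print(len(training_paths))
```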

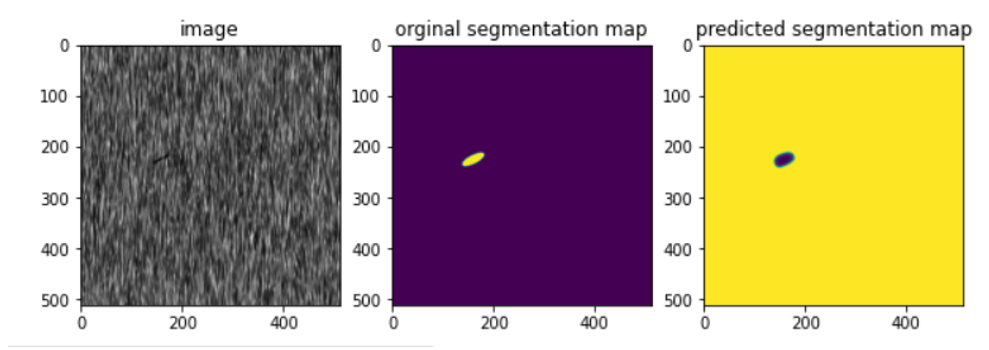

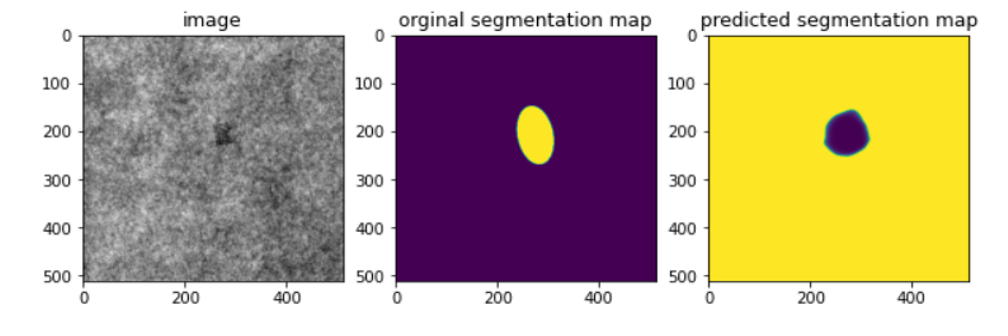

After 10 epochs, we are able to achieve an IoU score of 0.98 and a Dice loss of 0.007, which is pretty good. Let us see the segmentation maps of some images.

We can see that the model is able to predict the segmentation maps similar to the original segmentation maps.

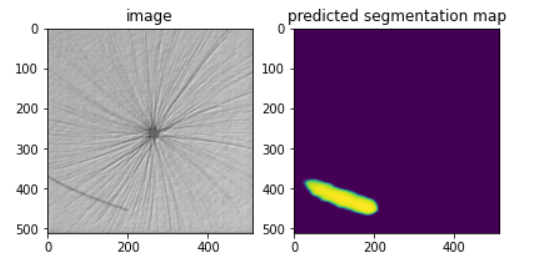

9. Prediction of segmentation maps on test data

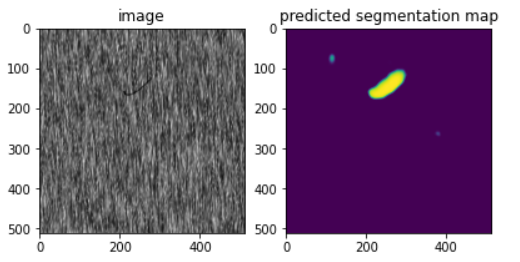

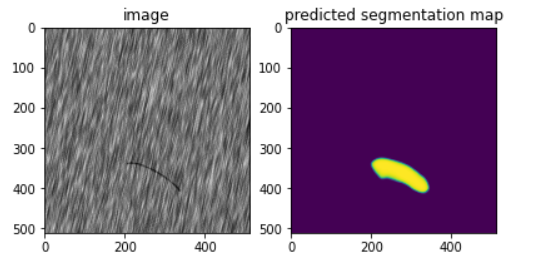

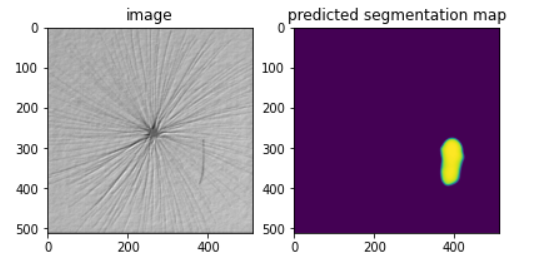

Now let us try to solve the problem at hand, which is to predict and plot the segmentation masks of the test images. The accompanying figure shows the predicted segmentation maps for some of the test images.

We can see that the model gave a good test performance and was able to detect the defects in the test images.

For any queries or suggestions, you can contact me through LinkedIn.

10. Future works

As mentioned above, the number of defective images was very low compared to the number of non-defective images. So we can improve the training by applying upsampling (oversampling) and augmentation techniques to the defective images.
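One detail matters when augmenting segmentation data: every geometric transform applied to an image must be applied identically to its mask. The following is a minimal NumPy sketch of that idea. The function name `augment_pair` and the choice of flips and 90-degree rotations are assumptions, not something tried in this case study.

```python
import numpy as np


def augment_pair(image, mask, rng):
    """Apply the same random flips and 90-degree rotation to an image and its mask."""
    if rng.random() < 0.5:
        image, mask = np.fliplr(image), np.fliplr(mask)
    if rng.random() < 0.5:
        image, mask = np.flipud(image), np.flipud(mask)
    k = int(rng.integers(0, 4))  # number of 90-degree turns
    return np.rot90(image, k).copy(), np.rot90(mask, k).copy()


rng = np.random.default_rng(0)
image = np.arange(16).reshape(4, 4)
mask = (image > 10).astype(np.uint8)  # toy "defect" region

aug_image, aug_mask = augment_pair(image, mask, rng)
print(aug_image)
print(aug_mask)
```

Calling `augment_pair` several times on each defective image would produce extra training pairs in which the mask still lines up with the defect.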